Table of Contents

We’ve never had access to more information. Each day, we send nearly 300 billion emails. We add four petabytes of data to Facebook and throw 5 billion inquiries into search engines.

We generate data when we walk with our phones. When we browse the web or exercise with a Fitbit or make a purchase, numbers pour into databases. They record our geographic locations, IP addresses, and purchasing behaviors.

Every aspect of business now generates data, and that generation is only going to increase. The development of 5G infrastructure is predicted to boost data production by a factor of between ten and 100. One estimate of the amount of data stored on servers and hard drives around the world is 44 zettabytes (that’s a number with 21 zeros).

That’s an enormous amount of data, but all of that information is only valuable if we can use it. We need to be able to access and analyze those figures. We have to be able not just to generate data and store it, but also to retrieve it and analyze it.

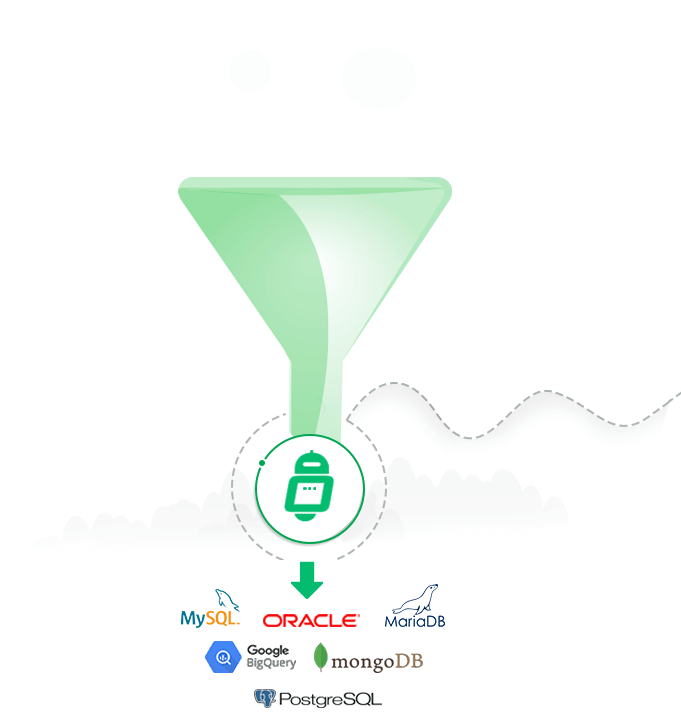

That’s the work of ETL — which stands for “extract, transform, load” — and it’s the subject of our guide. We’ll explore each stage of ETL, discuss where the data is extracted from and where it goes, and introduce some of the tools used in ETL.

What Is ETL?

ETL is the process of extracting data from a source, transforming (which involves cleaning, deduplicating, naming, and normalizing) the data, and then loading it into a data warehouse.

ETL helps to gather all of a company’s data into one place so that it can be mined and analyzed. Businesses can then use it to make better, more informed decisions.

Historically, ETL required developers to extract data from multiple sources. Not only did they need to work with a data source’s complicated APIs, but they also needed to clean the data and format it in a consistent way. They then needed to upload the data in a secure way to a data warehouse.

Today, thanks to tools like ETLrobot, the ETL process is far simpler.

Written By : Steve Gickling – M.S./Founder

Edited By : Emma Kessinger – VP of Account Strategy

Reviewed By : John Rampton – MBA/Entrepreneur/Founder

ETL in retail

Imagine that you’re the owner of an e-commerce company. You stock hundreds of products. You conduct thousands of transactions every day, selling to people all over the world.

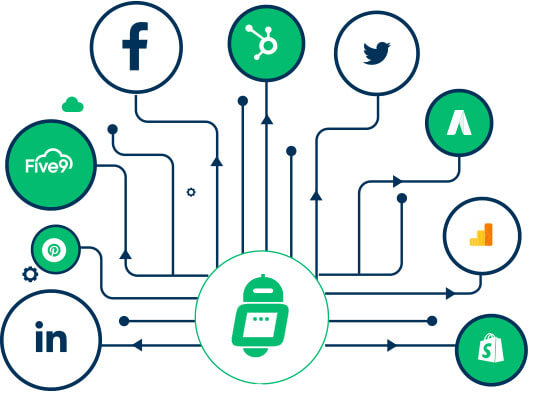

Like most businesses these days, you have data in various cloud-based systems that you need to bring together. Perhaps you have call center data in Five9, sales pipeline and CRM data in HubSpot, sales data in Shopify, advertising data in Facebook and Google, web analytics in Google, payment data in Stripe, and email marketing data in Mailchimp.

Your business is generating all of that data every day and storing it in separate cloud sources. If you could bring it together into one place, you would be better positioned to pull insights from it.

Outside of the enterprise, however, few companies can afford to pay a developer to tap each of their data sources (not to mention the costs of maintaining each source as the APIs change and evolve).

Tools like ETLrobot cost effectively bring all this valuable data together. They make it possible to analyze sales efforts and ad effectiveness against actual sales down to the product level, for example.

But before you can conduct those sorts of analyses, you’ll need to extract the data from each source, transform it into a format that you can use, and load it into your data warehouse.

Let’s start with the extraction.

1. Extraction: What it Is and How it Works

Your business needs to extract data because it’s typically housed within different programs and apps. All your Facebook Ads data is housed on Facebook, for instance, and all your customer data may be stored within Hubspot.

When you gather data from these separate sources, it’s usually unstructured and disorganized. Data is often extracted in large and difficult-to-read XML or JSON formats. This data may need to be structured, cleaned, and reformatted for a data warehouse. Only then can the information be analyzed and used by data scientists and business analysts to make decisions.

In case that isn’t clear, consider the data “mining” metaphor analysts often use. When a mining company extracts a diamond from the ground, it’s rough and dull. A diamond cutter then transforms it into a sparkling stone, and a jeweler loads it into a ring, which is when it delivers its full value.

Unlike diamond mines, though, data mines often come with existing infrastructure. That infrastructure comes in the form of APIs, which are like sockets into which developers can plug software to draw out data. With ETL, all data miners have to do is start the conveyor belts, load on the gems, cut the stones, and use them.

For example, advertisers on Facebook want to know how their ads are performing. They monitor what content their target demographic is responding to and which ads are generating the most interaction.

With that in mind, Facebook provides an API that allows software to extract performance data that advertisers need at the frequency they want. Advertisers might want to extract their data weekly or monthly, depending on the amount of data they’re generating.

The complexity of working with APIs

Data sources have different APIs, so it can be difficult to extract and utilize data when working with a variety of sources. Challenges include:

1. Authentication is the first step to working with an API. Authentication alone can be complex and time consuming to program. While there are as many proprietary authentication methods as there are systems which utilize them, they are largely variations of a few major approaches such as:

- Basic: Basic Authentication is a simple authentication scheme built into the HTTP protocol. This method is rarely recommended due to the inherent security flaws of passing the username and password and should only be used over HTTPS/TLS.

- Bearer: Bearer Authentication (also called Token Authentication) is an HTTP authentication scheme that involves security tokens called “bearer tokens.” The name “Bearer Authentication” can be understood as “give access to the bearer of this token.” Though slightly better than Basic Authentication, this method is also rarely recommended and should only be used over HTTPS/TLS.

- Digest: Digest Authentication was created to solve the security flaws of Basic and Bearer. Digest uses MD5 hashing to ensure that no usernames, passwords, HTTP methods, messages, or requested URIs are sent to the server in plaintext. Digest authentication is a more complex form of authentication because for every call needed, the client must make two. As the message is encrypted, Digest can be used with or without HTTPS, though it is still recommended to use HTTPS.

- OAuth 2.0: OAuth 2 is an authorization framework that enables applications to obtain limited access to user accounts on an HTTP service, such as Facebook, GitHub, and Google. It works by delegating user authentication to the service that hosts the user account, and authorizing third-party applications to access the user account. OAuth 2 provides authorization flows for web and desktop applications, and mobile devices. OAuth 2 is quickly becoming the new standard for API authentication.

2. Data architectures and languages vary. For example:

- SOAP operates with the two basic functions: GET and POST. GET is used to retrieve data from the server, while POST is used to add or modify data.

- REST, in its turn, changes the state of the corresponding source by making a request to the URI (Uniform Resource Identifier).

- GraphQL leverages requests of two types: queries that retrieve data from the server, and mutations that change the data.

3. There are many file formats to support, such as XML, JSON, TOML, CSON, YAML, and CSV. Each has its benefits, cons, and appropriate use cases, and each requires different parsing techniques.

4. A backoff/retry strategy must be implemented for the API calls. An API may be down or its extraction limits may have been reached, in which case it is good practice to have a backoff/retry strategy with some form of an exponential backoff algorithm.

5. Pagination needs to be supported. Data is often too large to extract in a single API call, therefore most sources support pagination. Pagination delivers a page of data of data at a time (500 records, for example). That data must be processed and the next page called until there are no pages left.

2. Transformation: Making it Understandable

The “T” in ETL refers to transforming data. Data transformation is defined as the process of changing data from one structure or format into another structure or format. The result is data that’s easier to use and understand.

Transformation involves the following steps:

1. Implementing a consistent naming strategy

Renaming the extracted data is the first and the simplest part of the process of transforming that data. That step alone requires strategic thought. The greater the clarity an ETL process can bring to the final data delivery, the more valuable that data will be.

2. Cleansing data to remove unwanted characters and to reformat dates

Raw data can be unclean and contain characters that it shouldn’t, such a carriage return or line feed. The process of cleansing involves removing unwanted characters and converting fields, such at dates and times, into a consistent and usable format.

3. Deduplicating records to ensure data integrity

Deduplication of records is essential to data integrity. It ensures, for example, that records such as sales totals aren’t counted more than once.

4. Structuring the data

Structuring data is the most complex part of transformation and involves significant thought and programming.

Data generally comes in unstructured JSON or XML formats. For SQL databases, the data needs to be normalized, or split out, into separate tables. For noSQL databases, such as MongoDB, the data still needs to be restructured and put in a format that is optimized for querying — a process known as “mapping.”

For example, a single Shopify order generates data that needs to be split out into many tables, including: orders, order line items, order discounts, order properties, order tax lines, order notes, order adjustments, order refunds, order shipping lines, and more. The ETL process separates and restructures this data, normalizing it so that the data isn’t duplicated. The user ends up with orders in one table, cross-referenced order line items in another table, and so on.

3. Load: Moving Data to the Data Warehouse

After you extract and transform data, you need to move it into your personal data warehouse for storage. This is the “load” part of “extract, transform, load.”

A data warehouse is a central repository containing integrated data extracted from an external source or from multiple sources. Data warehousing is important and can be expensive, but it doesn’t have to be. The most expensive part of data warehousing is generally the building and maintenance of the ETL process. ETLrobot makes the ETL process affordable, simple, and immediately available.

On Data Security

With so many data breaches these days, securing your data is paramount. Not only does ETLrobot extract your data securely using the latest internet security standards, but it also supports SSH-encrypted tunnels, which ensures your data is protected in transit to your database. Furthermore, ETLrobot acts as a data-passthrough engine and does not keep or store your data. Thus, once your data is transmitted to your data warehouse, it no longer exists anywhere within ETLrobot.

Maintaining Security

The methods companies use to secure data that they extract and hold vary, but they all address the same data security questions. There are a few general principles that hold true across industries:

- Security requires strategy.

You need to know exactly how your data is being stored and accessed. In the event of a security breach, you should know what steps to take. It’s much better to know beforehand how you’d respond to a hacker who has blocked access to your data and is threatening to delete it, for example, than trying to figure out a response as the ransom rises. - Access management is key.

Most data hacks are the result of human error, such as someone clicking a link in an authentic-looking phishing email. The more people have access to data, the more potential points of access an attacker will have to reach that data.

Only the people who need access to your data sets should have access to them. Designate gatekeepers to control levels of access. Sales staff, for example, might need to see the status of leads and their previous purchases, but they don’t need to be able to change that data or download all of it. Gatekeepers can make versions of that data available while keeping others inaccessible. - Simple steps matter.

There are a number of small steps every organization should take to safeguard their data. Passwords should be long (at least 12 characters), unique to each employee, free of word combinations, and composed of numbers and symbols. Data should be regularly backed up, because it’s not just theft that businesses have to worry about. Computers break or go up in flames, so your data should be stored in more than one place.

Dealing With Different ETL Sources

ETLrobot makes connecting the following sources to your data warehouse incredibly simple:

HubSpot ETL

HubSpot provides inbound marketing, sales, customer service, and CRM software. It has a generous free option, which makes it ideal for small businesses as they start to gather leads and contacts, and begin their marketing campaigns.

When you use ETLrobot with your HubSpot account, you’ll be able to extract a variety of resources, including (but not limited to):

- Campaigns

- Companies

- Contact lists

- Contacts

- Deals

- Email events

- Engagements

- Forms

- Owners

- Subscription changes

- Workflows

With this information in your data warehouse, you can drill into your marketing and sales data to get a deeper understanding of your customers, your sales pipelines, and your other business needs.

Five9 ETL

Five9 is a leading provider of cloud contact software, specializing in inbound and outbound call center software. Five9 has been around since 2001, has more than 2,000 customers worldwide, and manages more than 3 billion interactions every year.

Five9’s specialization makes ETL particularly important. In order to make the most of its contact services, you need to tap into its ecosystem of partners. Those partners supply CRM, workplace, management, telephony, and additional services.

When you attach ETLrobot to your Five9 account, you gain the ability to pull all sorts of data, including (but not limited to):

- Agent state

- Call logs

- ACD queues

- Contacts

- Worksheets

- Campaigns

- Users

With this information in your data warehouse, you can get a better sense of your agent performance, ACD statistics, and overall call-center performance.

Qualtrics ETL

At the heart of Qualtrics is its customizable survey software solution. Qualtrics has offices in four countries and works with more than 9,000 companies to manage a range of activities, from customer experience to market research to recruitment surveys.

The company offers what it calls four “cores:” Qualtrics CustomerXM manages customer feedback; Qualtrics EmployeeXM is a tool for HR management; Qualtrics ProductXM helps businesses take product concepts from market assessment to testing to launch; and Qualtrics BrandXM is all about relationship-building and marketing.

Each of those cores produces its own set of data, and Qualtrics offers its own hub, called Qualtrics Core XM, to bring all that data together. The company, however, understands that its customers want to load their data into their own platforms. Dennis Callanan, a Qualtrics software engineer, describes in a two-part blog post the complex framework that the company created to enable this ETL activity.

By connecting ETLrobot to your Qualtrics account, you unlock all sorts of information, including (but not limited to):

- Divisions

- Groups

- Organizations

- Questions

- Users

- Surveys

- Survey metadata

- Survey quotas

- Survey responses

Extracting, transforming, and loading Qualtrics data into your own data warehouse lets you look closely at survey performance and responses, giving you a clearer picture of your customers.

Shopify ETL

Shopify is used by more than a million businesses worldwide to power their online stores. It allows anyone to create an e-commerce site without having to touch a line of code. Clients include small entrepreneurs as well as medium-sized organizations, such as the Brooklyn Museum.

Customers who use a store built with Shopify generate a large amount of data. That data includes lists of customers, orders placed, products sold, inventory stocked, refunds issued, and more. Shopify users can even obtain information on abandoned shopping carts.

It’s easy to see how useful that data can be. Once it’s extracted, transformed, and loaded into an email program, an e-marketer can send more personalized messages. Some might use it to focus their social media marketing, while others may want it to research which products are selling the most. Shopify provides powerful analytics, but the ability to extract that data and analyze it with third-party tools can provide the boost that a small business needs.

What information, exactly, can ETLrobot pull from your Shopify account? It includes, but isn’t limited to:

- Customers

- Orders

- Products

- Transactions

- Refunds

- Checkouts

- Returns

Get more details about orders, returns, product trends, and customer behaviors by porting this information into your data warehouse.

GitHub ETL

If there’s one platform that should support the free movement of data, it’s GitHub. Although the platform is now owned by Microsoft, it was created as a place for developers to meet and share code via repositories. GitHub provides statistics about each repository, but those reports tend to be fairly limited.

Get a variety of information from your GitHub account by attaching it to ETLrobot, including (among other things):

- Collaborators

- Comments

- Commits

- Issues

- Pulls

- Reviews

- Assignees

Use this data to get a better grip on the performance of your software engineers and quality of your code. And if you’re pulling data from GitHub, you may as well stick around and read what other ETL tools developers are working on.

Google Analytics ETL

First, let’s take a moment to recognize how nice it is of Google to provide every website with a free analysis tool. And Google Analytics is a good stats tool at that: Website owners can see where their visitors are coming from, how they arrived, and what they do when they reach the site.

All of that information is valuable. It lets you see where to focus your marketing efforts, which pages are the most interesting to readers, and which search terms are the most used — and generate the most leads.

Unfortunately, Google doesn’t make it easy to extract that data. There’s no “export” button in its long list of options, though Google can automatically extract and load data from your Google Ads account.

Nonetheless, it is possible to use the ETL process to get data from your Google Analytics. By doing so, you can look deeper at data including:

- Account summary

- Campaign performance

- Mobile performance

- Traffic acquisition

- Keyword analytics

- Landing page analytics

By using an ETL tool to look deeper into Google Analytics data, you’ll learn more about how specific pages of your site perform, which devices your users access your site through, and where SEO opportunities exist.

LinkedIn Ads ETL

LinkedIn is a site for professional networking. It’s used by members of many fields to make themselves available for job offers and to connect with other people in their line of work. LinkedIn is also a popular advertising platform for generating brand awareness and engagement.

Why bother linking ETLrobot to your LinkedIn account? In doing so, you’ll gain access to:

- Ad campaigns

- Ad analytics

- Ad audience count

Advertising performance, outreach effectiveness, and more are at your fingertips with this integration. It’s particularly valuable to look at how LinkedIn ads data compares to other ad- and sales-related data sources.

Salesforce ETL

Salesforce is one of the world’s most popular CRM tools. It allows companies to build lists of contacts, categorize them into leads, and mark some as opportunities. Each company can have multiple accounts, each of which can contain the kind of data that’s the lifeblood of a business.

By apply ETLrobot to your Salesforce account, you’ll gain new insights from data about your:

- Accounts

- Contacts

- Leads

- Opportunities

- Users

Which lead sources are working, and why? Which lead sources might you be able to do more with? The first step to answering those questions is getting Salesforce data into your data warehouse.

Stripe ETL

Companies use Stripe to make digital transactions. Founded in San Francisco in 2009, Stripe is one of today’s most popular payment interfaces due to its integrations and ease of use.

If you have a Stripe account, connecting ETLrobot to it enables you to pull insights from these (and other) categories of payment data:

- Plans

- Subscriptions

- Customers

- Products

- Invoices

- Charges

- Coupons

- Transfers

Learn from your Stripe data not just how much revenue you’re bringing in each month, but also from which sites, currencies, and customers. If Stripe is not your only payments tool, compare it to revenue collected through other means.

Apple App Store ETL

Mobile apps are becoming increasingly important business tools. As a result, it’s increasingly important to know how well your app is received by its users and why.

There are a couple of key types of data you’ll be able to dig deeper into when you integrate ETLrobot with your Apple App Store account:

- App details

- User reviews

Who are your users, and what do they like and dislike about your app? Answer those questions for yourself by pulling Apple App Store data into your data warehouse. Bucket reviews, and help product developers prioritize new features.

Google Ads ETL

Yes, Google Analytics will show the effectiveness of a Google Ads campaign. But the information stored directly in your Google Ads account is much more detailed. Among other things, it contains the values and responsiveness of particular keywords, as well as the results of different ad copy.

Running a Google Ads campaign is a complex process that requires experimentation and testing. You need to track your results carefully and make adjustments before you can hit on the right combination of targeting and messaging. ETL is a valuable tool for doing just that.

What might you learn by linking ETLrobot to your Google Ads account? Go deeper with data, including your:

- Accounts

- Campaigns

- Ad groups

- Ads

Ad performance and conversion rates are, of course, two areas to investigate once your Google Ads information is stored securely in your data warehouse. But by blending those details with sales data, you’ll get a true glimpse of the impact Google Ads is having on sales.

Mailchimp ETL

Whatever your digital marketing needs, you can tackle them in Mailchimp. Mailchimp makes it easy to create, edit, and manage all sorts of online content. Although it began in the email marketing space, Mailchimp has since expanded to web copy, whitepapers, and more. Plus, Mailchimp’s metrics make campaign review seamless. It displays audience demographics, as well as performance figures, such as clicks, opens, and views.

Mailchimp’s ETLrobot integration lets you get a closer look at:

- Unsubscribes

- Campaigns

- List Segments

- Lists (Audiences)

- List Members

- List Segment Members

- Automations

Say you’re interested in seeing how frequently website visitors click versus email newsletter subscribers. Pull your Mailchimp data, and you may stumble on new ways to engage the people on your subscriber list.

Microsoft Advertising ETL

Previously known as Bing Ads, Microsoft Advertising provides pay-per-click ads associated with specific keywords. The challenge is bidding enough to beat out other advertisers on the platform without bankrupting yourself during the actual campaign. Whenever someone clicks on an ad for a term you won, you pay the amount you bid.

What can you do with Microsoft Advertising’s ETLrobot integration? Get your data out of the cloud for a better look at your:

- Ads

- Keywords

- Ad Groups

- Accounts

- Campaigns

Especially if you use Google Ads and Microsoft Advertising, it’s important to use an ETL solution to make sure you’re maximizing your investment. Compare what the platforms charge for keywords, their users’ search patterns, and their respective conversion rates.

Google Search Console ETL

Not to be confused with Google Ads, Google Search Console gives you a way to manage and optimize how your site shows up for Google search queries. Search Console simplifies SEO work, especially if your website is relatively young.

Why integrate Google Search Console with ETLrobot? Doing so helps you dig into key ad metrics, including:

- Impressions

- Performance

- Clicks

- Clickthrough rate

Perhaps you use both Bing and Google ads. By comparing the two sets of data, you can shift your spend toward whichever one gives you more traffic and clicks for your money.

Zendesk Support ETL

Does customer support stress you out? Zendesk Support makes it easier to track, manage, and follow up on customer support needs. The beauty of Zendesk Support is that it’s omnichannel: The platform works across social media, chat, email, phone, and more. Zendesk’s all-in-one model makes it easier to resolve tickets quickly, tackle customer questions, and keep your customer support team on track.

What Zendesk Support data can an ETLrobot integration help you zoom in on? Review your:

- Groups

- Tickets

- Ticket Fields

- Ticket Audits

- Ticket Metrics

- Organizations

- Satisfaction Rankings

- Users (End Users and Agents)

Don’t underestimate Zendesk Support’s built-in tools. Remember, though, that it’s limited to the customers who actually file a ticket. How do those people compare to your greater customer base? Through an ETL integration, you can focus on selling to customers groups that need less support.

Best Practices for Naming Data

You know that your data source contains all sorts of useful information. But you likely have no idea what specific information that source contains.

Take Apple Store data, for example. You might want to see how much time your users spend in each of your company’s programs. But you could also get data about which iOS versions your users’ phones are running, where your users are located, and a heap of other information that’s interesting but not particularly helpful.

That data could bury the information you actually need. And the information you do need might be labeled in the source with a term that’s clear to a data platform but not clear to an end user.

For the data source, those pieces of information are attributes to be manipulated; for an end user, they’re facts that need to be understood and used. That means that they need to be renamed so that they’re easily identifiable by human beings.

How to rename extracted data

The process of renaming extracted data depends on the ETL tool you’re using. Many ETL programs offer a data preview. That preview typically looks like a table made up of numbers and variables.

In some cases, you may be able to click on the header of each column and rewrite the name of that column. The previous name typically remains visible so you can see where this data comes from in the source.

The ETL process makes it easy to repeat the renaming for multiple categories or columns of data. It also involves naming those steps so you can track the process of extraction and transformation that each piece of data has undergone.

Common data-naming questions

No matter the data you’re working with, you want to be clear with your naming process. If you can’t understand it quickly and easily, then what’s the point?

One key issue is how you name the data fields. ETL is often performed by programmers. They’re technicians who are used to playing with variables and attributes, and whose approach to data is logical. They tend to value precision, and their focus tends to be on the numbers rather than on their descriptions.

The people who use your data, though, are not technicians. They are probably marketers, board members, managers, and team leaders. Your ETL specialists need to be able to follow the steps your data has taken from extraction through transformation to loading — but end users just want to see the end result. They want tables with information that they can understand.

Before ETL technicians rename extracted data, it’s worth taking time to meet with the people who are going to use that data. They should ask questions like:

- What data do you want to see?

- What are you going to do with that data?

- In what format do you want that data?

- What are the most important pieces of information you want to review?

- How do you want to manipulate and review that data?

For the technicians who oversee the extraction, transformation, and loading of data, those in-house team members are their customers.

Working effectively with those internal stakeholders requires ETL experts to extract data in a way that makes it easy to understand and use in the future.

Types of Data Extraction

Data extraction and transformation can take a couple of different forms:

Full Extractions

The biggest and most cumbersome type of data mining is full extraction. These often take place when you first need to move data from its source to another destination. An ETL tool will discover and map the data before transforming it into a form that the target application can use.

Incremental Extractions

The next time an extraction takes place, an ETL tool may extract only the data that the source has added or changed. This incremental extraction requires the added or changed data in the source to be time-stamped. The transformation should also produce a change table, which can help users to see the changes.

The process of incremental extraction is faster than a full extraction and uses fewer resources. But it also increases the risk of bugs in the extraction code. Changed data, for example, could remain in the source.

The trigger for an incremental extraction might be time-based. You can set the software to extract changed data at the end of each day, week, or at any other frequency. The more frequent the extraction, the greater your access to the data — but also the greater the draw on your resources.

Alternatively, you can also define specific events as triggers to begin the extraction process. For example, you could conduct data extraction automatically when the source adds a new client account, records a new delivery, or begins a new production cycle.

Each of those triggers could initiate the process of finding the data, mapping it, transforming it, and loading it into the destination application.

Types of Data Warehouses

Now that you know how ETL works and what it can do, let’s dive into a few different warehouses you may want to extract data to:

Amazon Web Services

When Amazon built AWS, it didn’t simply build giant warehouses filled with servers and then write the best software to query that data; it also copied much of the open-source data management software that other companies were building. As the company’s services have grown, it’s come to define much of what’s possible and what’s developing in cloud technology, including data management.

For business customers, AWS is understandably appealing. It’s easy to use, and Amazon has tried to cover every possible use case.

The company’s main data solution is Redshift, which serves companies of all shapes and sizes. Customers include Lyft, Pfizer, McDonalds, and DuoLingo. Extracting data to Redshift is as simple as using the “Copy” command to load data into tables. (Amazon recommends not using “Insert,” which it says is “prohibitively slow.”) The tables can then be updated with data manipulation commands to edit specific rows and columns.

Other loading processes are more complex and more expensive. Loading data into Redshift from Amazon S3, for example, means first downloading files in comma-separated value, character-delimited, and fixed-width formats. Users then have to create an Amazon S3 bucket to upload the data files, launch a Redshift cluster, and create database files. After copying the data files into the tables, they need to troubleshoot and correct any errors. Amazon estimates the time required for this process at an hour — and charges $1 per hour for each cluster.

Google Cloud

Amazon got a headstart on cloud data services, but other tech giants were not content to cede the field entirely to Jeff Bezos.

Google Cloud offers a broad range of online services, including BigQuery, a data warehousing service. Aimed at enterprises, it promises speedy SQL queries that leverage Google’s processing infrastructure.

Customers need only load their data into BigQuery to access it using Cloud Console, a web UI, a command-line tool, or the BigQuery REST API. Google also notes that customers can use a range of third-party tools to visualize and load their data.

That data can be loaded into BigQuery from cloud storage providers, other Google services, or any readable data source. Data can be placed into a new table or partition, or it can be added to an existing table or partition.

The good news is that Google doesn’t charge for loading data, though it does charge for storing it. The first 10 gigabytes are free for active and long-term storage, but rates for additional storage depend on what you plan to do with the data you’ve loaded. On-demand queries, for example, cost $5 per terabyte (the first terabyte is free). Monthly queries can cost as much as $10,000 per 500 slots.

Microsoft Azure

The last of the three major data warehouses comes from Microsoft. Azure started in 2008 as “Project Red Dog.” Like AWS and Amazon Cloud, it now provides a broad range of cloud-based computer services, including storage, hosting, media, content delivery, and data management.

Azure comes with its own import-export service that provides only one way to load data into the platform. It’s not straightforward: You need an Azure Storage account and a sufficient number of disks — either an internal SSD or HDD (You can’t import from a USB or an external HDD). You also need to be running Windows and using Microsoft’s own import-export tool, WAImportExport version 2.

To deliver the disks to Microsoft, you also need a FedEx/DHL account. If you fill the disks, you have to check a box in your account, and provide the carrier and tracking number. You then have to track the progress of your job.

That’s not a particularly user-friendly way to import information into a data warehouse. It might be a useful way to offload an archive, but it’s not a solution for data that needs to be regularly uploaded and updated from a separate source.

There are other ways to load data into Azure, of course. Microsoft explains how to use PolyBase, a data tool for SQL servers, to load data from Azure Blob, a place to store unstructured data, into the Azure portal. It’s a process that requires first creating a data warehouse, setting up a server-level firewall, creating external tables, and using a CTAS T-SQL statement to load the data.

That route might be more practical than asking DHL to send old disks to a data center, but it’s still not straightforward or easy. It requires technical skills, time, and enough attention to detail to make sure that no mistakes are made.

Tools for Extracting Data

Clearly, the ETL process depends on a number of elements. It needs a source from which the data will be extracted and a destination into which the data will be loaded. It also needs data that you’ve collected and want to query.

Extracting that data, transforming it, and loading it into a new platform requires a special tool.

There’s no shortage of data extraction tools, many of which have been designed to extract a particular kind of data:

ETLrobot

Businesses with needs broader than financial data or Amazon sales have a limited number of ETL options. ETLrobot offers users a simple setup process and clear pricing that starts as low as $99 per month for 5 million rows of data. The rest happens behind the scenes as the program extracts data, transforms it, and loads it into the customer’s chosen data warehouse.

HubDoc

HubDoc specializes in financial data. The program scans receipts, emails, bills, and statements to extract financial information and store it all in one place. It aims to make invoicing, bill-paying, and financial management easier. It’s not the kind of tool that will transform a business’s marketing efforts, but it’s helpful in an area where data analysis can make a major bottom-line difference.

Parseur

Parseur does something similar to HubDoc. Instead of focusing on financial data, however, it extracts text from emails, PDFs, sales orders, and more. So that users do not need to copy information from a page and paste it into a database, Parseur provides an automated process. It lets you create the fields you need and choose the information you want to fill them with. Like HubDoc, it’s not a tool that lets you direct a constant flow of data into your website. But it can save you a great deal of manual data entry.

A2X

A2X is another targeted ETL tool. It’s aimed at Amazon sellers who can use it to automatically fetch their Amazon transaction data. It works with Quickbooks and Shopify, as well as with Amazon, and it shows that ETL isn’t just for enterprises. A2X is tailored for small- and medium-sized businesses with particular needs.

Matillion

Matillion offers a broad ETL platform. It has 70 pre-built connectors to suck up data from common sources, such as Gmail, Facebook, and Salesforce. Matillion’s data-warehouse connections, though, are more limited. Matillion’s customers can load data into Amazon Redshift, Google BigQuery, or Snowflake, a rival service.

Funnel

Funnel is broader in its integrations than Matillion. The product hooks up with more than 500 data sources. Funnel can export the data to nearly any destination. Marketing data can be moved into a business intelligence system using Funnel, and Google Data Studio can take that data and show it off in all sorts of exciting ways. Funnel can also upload data to Google Sheets, as well as create dashboards and reports. It’s not cheap, though: Prices start at $499 per month, so Funnel isn’t an option for very small firms with limited amounts of data.

Tools for Data Analysis and Visualization

ETLrobot can help you get all your data, not just a few key metrics, into your data warehouse. It gives your analysts, data scientists, and other team members the freedom to choose and use their favorite tools to best represent and understand the data they’ve exported.

Some popular data analysis and visualization tools are:

- Tableau

- Looker

- Chartio

- Sisense

All of these tools provide ways to compare data in helpful graphs and dashboards. Exporting the data is vital, but it’s also important to have a plan of action for how to best utilize the information to make better business decisions for your team.

ETL for Business

ETL is an essential business process. Every company in every industry generates reams of data. They gather information about their products, their processes, their customers, their transactions, and their marketing campaigns.

All of that information is valuable. But in order to maximize the value of that data, it needs to be in a form that team members can read and query.

ETL programs accomplish that, but they need to be easy to use. They need to be effective. They have to be able to extract data quickly and load it into a data warehouse securely. And they need to be affordable.

Once you develop on a solid ETL process, you’ll have all you need to make the most of the data you’ve collected.